Process data programmatically

Rakam provides several ways to process and analyze your data, depending on your use case.

1. Webhook Integration

If you want to consume the data in your own API, you can configure the Rakam API to send the data to external systems in micro-batches. Each micro-batch contains all the events the server processed in the previous minute. Set the following configuration properties:

```properties
collection.webhook.url=https://yourapi.com/callback
# Optional: set additional request headers
collection.webhook.headers={"customheader":"test"}
```

Every minute, we send all the events from that minute in a single HTTP request to the https://yourapi.com/callback endpoint. Here is a sample request body:

```json
{
  "activities": [
    {
      "collection": "add_to_basket",
      "properties": {
        "product_id": "123",
        "_time": "2020-11-09T07:51:49.053Z",
        "_user": "test"
      }
    },
    {
      "collection": "complete_order",
      "properties": {
        "product_id": "123",
        "_time": "2020-11-09T07:52:49.053Z",
        "_user": "test"
      }
    }
  ]
}
```
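A consumer usually starts by splitting a batch back into individual events. The Python sketch below assumes the request body has exactly the shape of the sample above; `parse_batch` is a hypothetical helper name, not part of Rakam. It groups the events by `collection` and converts `_time` into a timezone-aware `datetime`.

```python
import json
from collections import defaultdict
from datetime import datetime


def parse_batch(body: bytes) -> dict[str, list[dict]]:
    """Group the events of one webhook micro-batch by collection name.

    Hypothetical helper. Assumes a top-level "activities" array whose items
    carry "collection" and "properties", as in the sample payload.
    """
    payload = json.loads(body)
    grouped: dict[str, list[dict]] = defaultdict(list)
    for activity in payload.get("activities", []):
        props = dict(activity.get("properties", {}))
        # "_time" is an ISO-8601 UTC timestamp such as 2020-11-09T07:51:49.053Z.
        # Older Pythons' fromisoformat() rejects a trailing "Z", so map it to +00:00.
        if "_time" in props:
            props["_time"] = datetime.fromisoformat(props["_time"].replace("Z", "+00:00"))
        grouped[activity["collection"]].append(props)
    return dict(grouped)
```

Each key in the returned dictionary is a collection name such as `add_to_basket`, and each value is the list of that collection's event properties in the order they appeared in the batch.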

Your API must respond with HTTP status code 200. Otherwise, the server retries the request with backoff and gives up after 4 further attempts.
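Because only a 200 response counts as a successful delivery, it is safest to acknowledge a batch only after you have processed it. Below is a minimal receiver sketch using Python's standard-library `http.server`. It assumes the batch arrives as a POST request with a JSON body, and `handle_activities` is a hypothetical placeholder for your own logic. Since failed deliveries are retried, the same events may reach you more than once, so downstream processing should tolerate duplicates.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def handle_activities(activities: list) -> None:
    """Hypothetical hook: replace with your own processing logic."""
    for activity in activities:
        print(activity["collection"], activity["properties"].get("_user"))


class WebhookHandler(BaseHTTPRequestHandler):
    # Assumption: the micro-batch is delivered as a POST with a JSON body.
    def do_POST(self) -> None:
        length = int(self.headers.get("Content-Length", 0))
        body = self.rfile.read(length)
        try:
            activities = json.loads(body)["activities"]
        except (ValueError, KeyError, TypeError):
            # Any status other than 200 makes the sender retry the request.
            self.send_response(400)
            self.end_headers()
            return
        handle_activities(activities)
        # Acknowledge only after processing, so a crash before this line
        # leads to a retry instead of a silently dropped batch.
        self.send_response(200)
        self.end_headers()


def serve(host: str = "0.0.0.0", port: int = 8080) -> None:
    """Start the listener; port 8080 is an arbitrary example value."""
    HTTPServer((host, port), WebhookHandler).serve_forever()
```

Calling `serve()` starts listening on port 8080; you would then point `collection.webhook.url` at that host. The standard-library server keeps the example dependency-free, but it is not meant for production traffic, where a regular web framework or application server is the usual choice.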

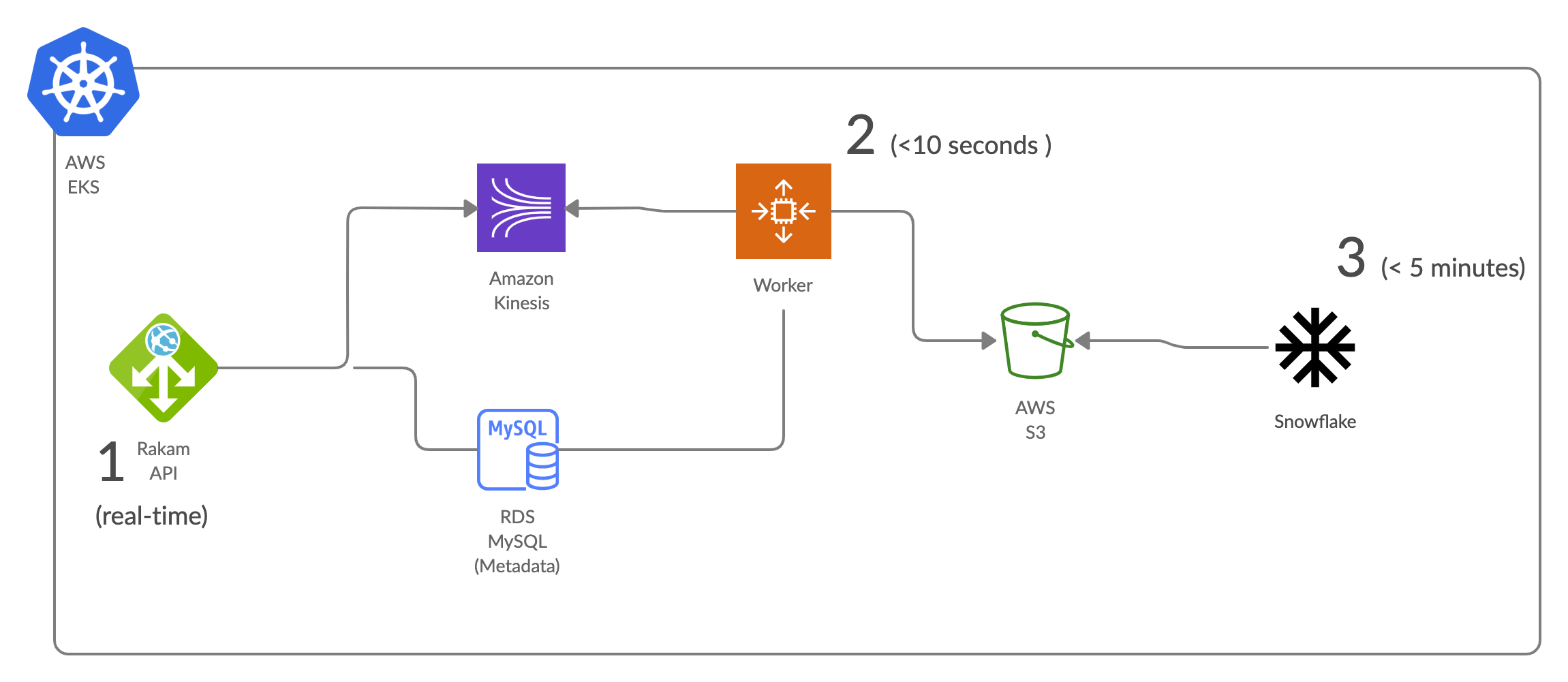

2. Processing the data via the worker

If you would like to consume the data from Kinesis or Kafka, we have open-sourced our data ingestion engine. To transform and process the data programmatically using its checkpoint mechanism, you can write a custom target connector. The documentation is in the project's README.
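The engine's actual connector interface is described in its README and is not reproduced here. As a rough illustration of why checkpointing matters, here is a hypothetical Python sketch of the general pattern (the `Record` and `CheckpointingConnector` names are made up, not the engine's API): process a batch first, then advance the checkpoint, so a failure causes the batch to be read again rather than lost.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass
class Record:
    # Hypothetical: a monotonically increasing position within one partition
    # or shard (for example a Kafka offset), plus the event itself.
    offset: int
    event: dict


@dataclass
class CheckpointingConnector:
    """Hypothetical connector illustrating process-then-checkpoint."""
    process: Callable[[list[dict]], None]
    checkpoint: int = -1  # last offset that was fully processed

    def on_batch(self, records: Iterable[Record]) -> None:
        # Skip records at or below the checkpoint, e.g. after a replay.
        pending = [r for r in records if r.offset > self.checkpoint]
        if not pending:
            return
        self.process([r.event for r in pending])
        # Advance the checkpoint only after process() returned; if it raised,
        # the checkpoint stays where it was and the batch can be replayed.
        self.checkpoint = max(r.offset for r in pending)
```

This gives at-least-once delivery into your target: a crash between `process()` and the checkpoint update replays the batch, so the target should tolerate duplicates.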

Updated over 5 years ago